Gall Of A Red Ox

For Row Store indexes — Columnstore is different and should be maintained differently — rebuilding indexes gets prescribed as a cure for performance issues of all types.

- Sudden query slowdown? Rebuild’em!

- Blocking? Rebuild’em!

- High CPU? Rebuild’em!

- No idea what’s going on? Rebuild’em!

While an index rebuild will update stats, which is sometimes beneficial, it’s a lot easier to, you guessed it, just update stats.

The main problem is that your index rebuild script is probably measuring the wrong thing.

It’ll have the same net effect on plan invalidation, with a heck of a lot of less resource usage and, potentially, blocking.

Bear Bile

It’s my opinion, and you can take it or leave it, that index rebuilds should be reserved for special circumstances.

- You deleted a lot of data

- You need to change something about the index

- You have a Heap with a lot of forwarded fetches

But why is that my opinion? What evidence has informed it? No, it’s not just because I like to be disagreeable. It’s mostly that I went through a fairly normal progression.

- A lot of people are talking about index fragmentation, sounds bad!

- Hey, I think this fixed something? I’m gonna keep doing it.

- Well, I still have problems, but at least I don’t have fragmentation.

- I don’t have enough time to run CHECKDB, I need to rebuild less often.

- No one seems to be complaining when I don’t rebuild indexes…

- My problems had nothing to do with index fragmentation!

Here’s How To Order

But what metrics might an index rebuild fix, and why was so much fuss made about them for so long?

To test this, and to get you, dear reader, an explanation, I set up some tests.

First, I created a simple index.

CREATE INDEX ix_whatever ON dbo.Users (Reputation);

Then, I wrote queries that will touch 1%, 10%, and 100% of the Users table (2013 version, 2.4mm rows).

SELECT COUNT(*) AS records FROM dbo.Users AS u WHERE u.Reputation = 289 AND 1 = (SELECT 1); SELECT COUNT(*) AS records FROM dbo.Users AS u WHERE u.Reputation BETWEEN 100 AND 450 AND 1 = (SELECT 1); SELECT COUNT(*) AS records FROM dbo.Users AS u WHERE 1 = (SELECT 1);

After that, it’s a matter of introducing the “harmful” kind of fragmentation — empty space on pages.

This is the kind of fragmentation that bloats your indexes, both on disk and in memory, leaving less room for other things.

ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 5); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 10); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 20); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 30); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 40); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 50); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 60); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 70); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 80); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 90); ALTER INDEX ix_whatever ON dbo.Users REBUILD WITH(FILLFACTOR = 100);

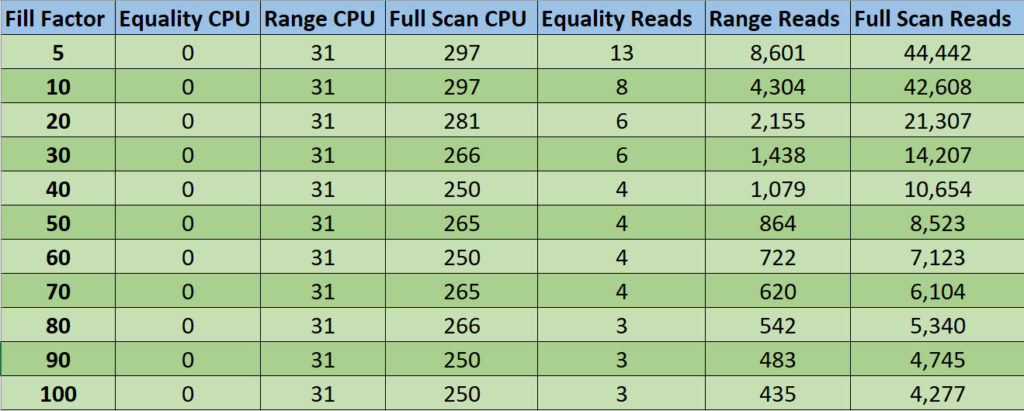

Now, since I don’t wanna take that many screenshots, I embraced my long-lost ENTJ and made an Excel table.

Not surprisingly, the chief metric that went down as fill factor went up is reads. CPU didn’t change much, if at all.

That being said, you have to be real goofy about fill factor to get reads to matter.

Within a sane boundary of fill factors, which I’d call 70 to 100, ask yourself if any variation there is the root cause of your performance problems.

Now, I know you. It’s hard to talk you out of your superstitions, and you’re gonna keep thrashing that expensive SAN with constant I/O nonsense.

But maybe I can talk you into raising the threshold that you do it at. Maybe I can get you to not rebuild (or reorg) your indexes until they hit >70% fragmentation. That’s 30% fill factor on the chart.

Virgin Wax

Alright, now we know that rebuilds help reads. When would number of reads matter most?

- If your disks are the quite elderly spinning variety

- And you don’t have enough memory to cache your hot data

- And maybe your hot data could fit into memory if were defragmented to 100% fill factor

You can kinda start to put a picture together of when it mattered. Before SSDs, before 64bit OSes (32b capped out at ~3GB RAM), and well before flash storage, etc.

Way back when, rebuilding made sense. Reading unordered data could have a big impact on a workload because disks would have to physically move to find unordered/spread out data.

So yeah, if you notice that your workload is doing a lot more reads over time, and rebuilding at 100% fill factor reduces reads, it might be worth rebuilding at some point. But to solve any other common workload problems, look somewhere else. Rebuilding indexes is not the cure.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.

Great post. I hate it when common sense and pragmatism get in the way of my preconceived ideas and bad habits.;)

Peet — my pleasure!

Erik,

So I’ve (almost) stopped rebuilding indexes, and I’m thinking about stopping the Update Stats job.

How often would you recommend updating stats?

Best regards

Henrik

I would continue to update statistics, at least nightly (just taking a blind guess).

There have been times when updating stats more or less frequently also made sense, too, depending on data volatility and parameter sensitivity.

Thanks!

We are rebuilidng indexes on daily basis as a sql agent job , if % avg fragmentation > 30% AND PAGE COUNT > 500 , hence there are around 300 indexes where % avg frag is > 80%

As they havent got defragmented as page count hasn’t reached 500 yet.

Is that a good practice ??

Shaggy — Maybe if you’re on hardware from 2005. I wouldn’t touch an index with < 75% fragmentation, and <50,000 pages. Thanks, Erik