Rowgroup elimination is a performance optimization based on compressed rowgroup metadata that can allow rowgroups to be skipped during query execution. It’s likely that all of the metadata used for the optimization is exposed in the sys.column_store_segments DMV. This blog post explores some of the less well known rules and limitations for rowgroup elimination.

Test Data

To keep things very simple we’ll build 100 rowgroups with exactly 1 million rows in each of them. ID and ID2 increase from 1 to 10000000 and ID_NULL is always NULL. Code to create and populate the table:

DROP TABLE IF EXISTS dbo.MILLIONAIRE_CCI; CREATE TABLE dbo.MILLIONAIRE_CCI ( ID BIGINT NULL, ID2 BIGINT NULL, ID_NULL BIGINT NULL, INDEX CCI_MILLIONAIRE_CCI CLUSTERED COLUMNSTORE ); DECLARE @loop INT = 0; BEGIN SET NOCOUNT ON; WHILE @loop < 100 BEGIN INSERT INTO dbo.MILLIONAIRE_CCI WITH (TABLOCK) SELECT t.RN, t.RN, NULL FROM ( SELECT TOP (1000000) (1000000 * @loop) + ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) RN FROM master..spt_values t1 CROSS JOIN master..spt_values t2 ORDER BY RN ) t OPTION (MAXDOP 1); SET @loop = @loop + 1; END; END;

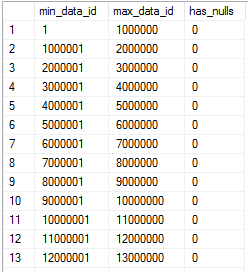

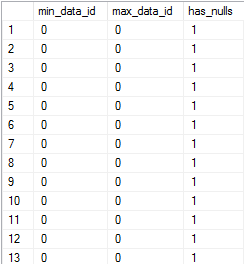

We can expect very good rowgroup elimination on the ID and ID2 columns based on how we built them. That can be verified by calculating the REFF or by looking at sys.column_store_segments:

Code to generate the above result set:

SELECT css.min_data_id, css.max_data_id, css.has_nulls

FROM sys.objects o

INNER JOIN sys.columns c ON o.object_id = c.object_id

INNER JOIN sys.partitions p ON o.object_id = p.object_id

INNER JOIN sys.column_store_segments css

ON p.hobt_id = css.hobt_id

AND css.column_id = c.column_id

INNER JOIN sys.dm_db_column_store_row_group_physical_stats s

ON o.object_id = s.object_id

AND css.segment_id = s.row_group_id

AND s.partition_number = p.partition_number

WHERE o.name = 'MILLIONAIRE_CCI'

AND c.name = 'ID'

AND s.[state] = 3

ORDER BY css.min_data_id, css.segment_id;

Many of the test queries below select a single aggregate value. This isn’t done for any special reason other than to limit the size of the result set. The easiest way to see how many rowgroups were skipped is to use SET STATISTICS IO ON and that requires that the results be returned to the client.

Single Column Filtering

Consider the following query:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID IN (1000000, 2000001);

Based on how we built the segments for the ID column we might expect that only two segments will need to be read: segment 1 with ID values of 1-1000000 and segment 3 with ID values of 2000001-3000000. As usual, SQL Server does not care about our expectations:

Table ‘MILLIONAIRE_CCI’. Segment reads 3, segment skipped 97.

Why did the storage engine scan two segments instead of three? Running another test makes the problem more clear:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID IN (1, 100000000);

For this query we end up scanning the entire table:

Table ‘MILLIONAIRE_CCI’. Segment reads 100, segment skipped 0.

It seems as if the query optimizer reduces the predicate against the filtered column to be a range of IDs. That range of IDs is used for rowgroup elimination. In some cases it’s possible to write a WHERE clause that won’t return any rows but still isn’t eligible for rowgroup elimination. The storage engine is not able to skip any segments while executing the below query:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID < 0 OR ID > 100000000;

There isn’t an issue when the where clause is filtering on a contiguous range. For example, the following query skips 98 segments as expected:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID BETWEEN 1 AND 2000000;

There also isn’t an issue when filtering down to multiple values as long as those values are sufficiently close together, as shown with the first example. I also wasn’t able to find any liminations around the number of values in the IN clause. The query below reads 1 segment and skips 99 as we might hope:

SELECT MAX(l.ID) FROM dbo.MILLIONAIRE_CCI l WHERE l.ID IN ( 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 , 11, 12, 13, 14, 15, 16, 17, 18, 19, 20 , 21, 22, 23, 24, 25, 26, 27, 28, 29, 30 , 31, 32, 33, 34, 35, 36, 37, 38, 39, 40 , 41, 42, 43, 44, 45, 46, 47, 48, 49, 50 , 51, 52, 53, 54, 55, 56, 57, 58, 59, 60 , 61, 62, 63, 64 );

If we add one more filter value then the query optimizer changes the plan to use a join:

The above query is eligible for rowgroup elimination but it follows slightly different ruless as covered later in this post.

It is possible to disable the transformation to a join by using the undocumented query hint QueryRuleOff SelToLSJ. With 976 entries in the IN clause I still get rowgroup elimination as expected. With 977 entries nothing was pushed to the scan at all, and we get a truly horrible plan:

This doesn’t appear to be a columnstore limitation. The same behavior can be observed with a clusted rowstore index.

Getting back on track, the internal calculation around which rowgroups to skip isn’t always as simple as calculating the minimum and maximum in the range and using those values to do elimination. It’s possible to end up with no rowgroup elimination even when the maximum and minimum ID in the WHERE clause are close to each other. Consider the following query:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID BETWEEN 1 AND 2 OR ID BETWEEN 2 AND 3;

The storage engine only has to read a single segment. We can see in the query plan that the optimizer was able to simplify the expression into something that happens to qualify for rowgroup elimination:

Now consider the following query:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID BETWEEN 1 AND 2 OR ID BETWEEN 3 AND 4;

It would be helpful if the query optimizer changed the predicate to ID BETWEEN 1 AND 4 when doing calculations around which rowgroups can be skipped. This does not happen, and as a result all 100 rowgroups are scanned. Rowgroup elimination won’t be available when the WHERE clause is a sufficiently complicated mix of AND and OR conditions, even when filtering on just one column.

NULLs

Information about NULLs is stored internally and can be used for rowgroup elimination. SQL Server knows that none of the compressed segments for the ID column contain NULL, so the storage engine can skip all 100 segments for the following query:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID IS NULL;

Naturally, reversing the filter for this query will require the storage engine to scan the entire table.

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID IS NOT NULL;

We might expect that query to skip all segments if we change the filter column to ID_NULL. All rows in the rowgroups for ID_NULL are NULL and SQL Server ought to be aware of that fact. However, the storage engine still scans the entire table even for the query below:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID_NULL IS NOT NULL;

The DMV for ID_NULL doesn’t look as we might expect:

sys.column_store_dictionaries has a value of 0 for the entry_count column. It seems likely that the fact that the segments only contain NULL can be deduced from information already tracked by SQL Server. Rowgroup elimination for IS NOT NULL may have not been added because it was thought to be too unlikely of a use case.

Filters on Multiple Columns

To state it simply, rowgroup elimination can work quite well with AND predicates on different columns. It will not work with OR predicates on different columns unless the query optimizer can simplify the expression to something that’s eligible for rowgroup elimination.

The following queries are all able to skip 99 rowgroups:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID = 1 AND ID2 = 1; SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID BETWEEN 1 AND 2 AND ID2 BETWEEN 3 AND 4; SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID BETWEEN 1 AND 100000000 AND ID2 BETWEEN 1000001 AND 2000000;

This query skips all 100 rowgroups:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID = 1 AND ID2 = 1000001;

The storage engine doesn’t take the union of rowgroups that could be relevant. It instead takes the intersection, so adding AND predicates won’t increase the number of segments scanned, unless perhaps if you do something very unreasonable. The following query scans one rowgroup as expected:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID BETWEEN 1 AND 100000000 AND ID2 BETWEEN 1000001 AND 2000000 AND ID > ID2;

The final part of the WHERE clause is implemented in a filter operator. The rest of the WHERE clause remains eligible for rowgroup elimination.

Now let’s try a simple query with an OR predicate:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID = 1 OR ID2 = 1;

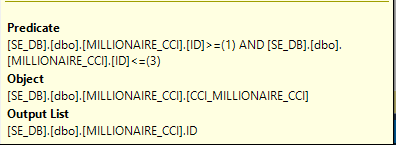

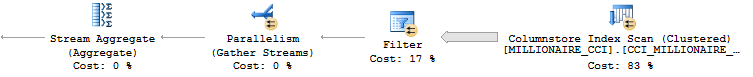

We might hope that the storage engine is able to deduce that only the first segment is relevant. Instead, rowgroup elimination isn’t even attempted. The predicate is implemented as a filter:

The only situation with OR filters that I’ve found to work with rowgroup elimination is when the optimizer can eliminate one of them. For example, the following query scans 5 segments because the optimizer is able to eliminate the condition on the ID2 column:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID IN (1, 5000000) OR ID2 BETWEEN 1 AND 0;

Joins

The query optimizer is able to copy predicates when filtering and joining on the same column. The copied predicates are eligible for rowgroup elimination. Consider the query below:

SELECT MAX(l1.ID) FROM dbo.MILLIONAIRE_CCI l1 INNER JOIN dbo.MILLIONAIRE_CCI l2 ON l1.ID = l2.ID WHERE l1.ID BETWEEN 1 AND 1000000;

Only two segments are read because the filter on ID can be applied to both sides of the join. The same behavior can be observed when forcing a merge join. Loop join is a bit different. As covered in the post on CCI string aggregation, rowgroup elimination does not occur on the inner side of a loop. Consider the following query:

SELECT MAX(l1.ID) FROM dbo.MILLIONAIRE_CCI l1 INNER JOIN dbo.MILLIONAIRE_CCI l2 ON l1.ID = l2.ID WHERE l1.ID BETWEEN 1 AND 1000 OPTION (LOOP JOIN, NO_PERFORMANCE_SPOOL);

The inner side is scanned 1000 times and the outer side is scanned once. The filter on ID allows all segments to be skipped besides one. So we should read 1001 segments and skip 1001 * 100 – 1001 = 99099 segments. This is what happens:

Table ‘MILLIONAIRE_CCI’. Segment reads 1001, segment skipped 99099.

More segments will be read depending on how many rowgroups the filter crosses. Suppose that we include rows with an ID that’s between 999501 and 1000500:

SELECT MAX(l1.ID) FROM dbo.MILLIONAIRE_CCI l1 INNER JOIN dbo.MILLIONAIRE_CCI l2 ON l1.ID = l2.ID WHERE l1.ID BETWEEN 999501 AND 1000500 OPTION (LOOP JOIN, NO_PERFORMANCE_SPOOL);

Now each scan on both the inner and outer side will need to read two segments:

Table ‘MILLIONAIRE_CCI’. Segment reads 2002, segment skipped 98098.

It’s possible to get rowgroup elimination even when filtering and joining on different columns. Consider the following query that joins on ID but filters on ID2:

SELECT MAX(l1.ID) FROM dbo.MILLIONAIRE_CCI l1 INNER JOIN dbo.MILLIONAIRE_CCI l2 ON l1.ID = l2.ID WHERE l1.ID2 BETWEEN 1 AND 1000000;

We still get rowgroup elimination against both sides of the join:

Table ‘MILLIONAIRE_CCI’. Segment reads 2, segment skipped 198.

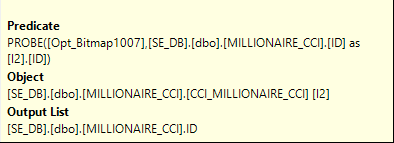

The key is the optimized bitmap:

That allows rowgroup elimination to happen on both sides. Bitmap optimization can only occur with hash joins, so queries written in this way that do a merge or loop join won’t be able to take advantage of rowgroup elimination against both tables.

Less Reasonable Queries

Below is a set of sometimes unreasonable queries to test some of the limits around rowgroup elimilation. It was surprising how often the queries remained eligible for rowgroup elimination. For example, local variables seem to cause no issues, even without PEO. The following query reads just one segment:

DECLARE @ID_FILTER BIGINT = 1; SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID = @ID_FILTER;

Data type conversions on the filtered expression don’t make the query ineligible for rowgroup elimination:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID = '1';

Casting on the filtered column is going to prevent rowgroup elimination. As will “optimizer tricks” like adding zero to the column:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID + 0 = 1;

We read all rowgroups:

Table ‘MILLIONAIRE_CCI’. Segment reads 100, segment skipped 0.

The query below is eligible for rowgroup elimination:

SELECT MAX(ID) FROM dbo.MILLIONAIRE_CCI WHERE ID <= CEILING(RAND());

Using scalar UDFs in queries is a terrible idea, but let’s create one for testing purposes:

CREATE OR ALTER FUNCTION dbo.CHEAP_UDF() RETURNS BIGINT AS BEGIN RETURN 1; END;

As you might expect, the following query runs without parallelism and cannot skip any segments:

SELECT MAX(l.ID) FROM dbo.MILLIONAIRE_CCI l WHERE l.ID = dbo.CHEAP_UDF();

However, if we add SCHEMABINDING to the function definition then we get rowgroup elimination:

Table ‘MILLIONAIRE_CCI’. Segment reads 1, segment skipped 99.

The query below gets rowgroup elimination with and without SCHEMABINDING:

SELECT MAX(l.ID) FROM dbo.MILLIONAIRE_CCI l WHERE l.ID = (SELECT MAX(dbo.CHEAP_UDF()));

Query Rewrites for Better Rowgroup Elimination

In some cases it’s possible to rewrite queries to get better rowgroup elimination. This requires knowing your data and awareness of the rules around rowgroup elimination. Going back to an earlier example, the following query isn’t eligible for rowgroup elimination (without very convenient constraints):

SELECT * FROM dbo.MILLIONAIRE_CCI WHERE ID = 1 OR ID2 = 1;

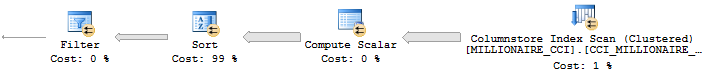

It can be written to use UNION or UNION ALL. Here’s the UNION query:

SELECT * FROM dbo.MILLIONAIRE_CCI WHERE ID = 1 UNION SELECT * FROM dbo.MILLIONAIRE_CCI WHERE ID2 = 1;

Now the storage engine skips 198 segments and only reads 2:

Table ‘MILLIONAIRE_CCI’. Segment reads 2, segment skipped 198.

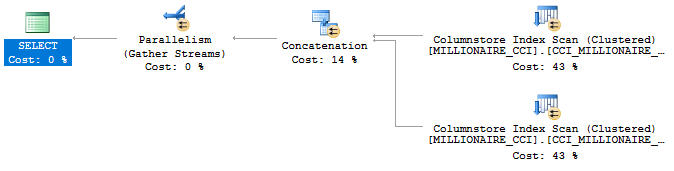

In some cases it may be advantageous to avoid the sort. The query below has the same rowgroup elimination:

SELECT * FROM dbo.MILLIONAIRE_CCI WHERE ID = 1 UNION ALL SELECT * FROM dbo.MILLIONAIRE_CCI WHERE ID2 = 1 AND ID <> 1;

Here’s the query plan:

Consider another query with a wide range of values in the IN clause, but filtered against a single column. The query below won’t be able to skip any rowgroups because we’re including the minimum and maximum value of ID in the query’s results:

SELECT * FROM dbo.MILLIONAIRE_CCI WHERE ID IN ( 1, 2, 3, 4, 5, 6, 7, 8, 9, 10 , 100000000 );

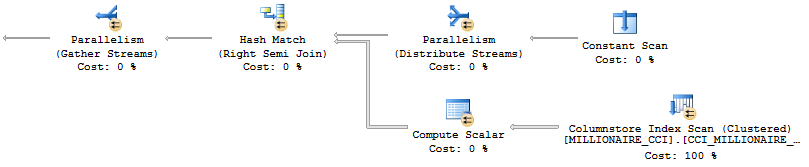

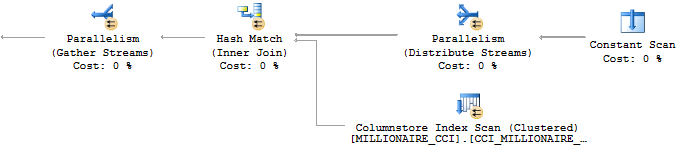

It may be impractical to write out the query using UNION. Instead, we can essentially force a join by putting the filter values into a derived table. The optimizer is likely to move the IN list to a constant scan and do a hash join to the CCI. We can get rowgroup elimination through the bitmap filter on the hash join. Here’s one way to rewrite the query:

SELECT c.* FROM ( VALUES (1), (2), (3), (4), (5) , (6), (7), (8), (9), (10) , (100000000) ) v(x) INNER JOIN dbo.MILLIONAIRE_CCI c ON c.ID = v.x;

Here’s the plan:

As expected, we only need to scan 2 rowgroups:

Table ‘MILLIONAIRE_CCI’. Segment reads 2, segment skipped 98.

SQL Server 2017 Changes

I ran all of the test queries against SQL Server 2017 RC2. I was not able to observe any differences. It may be that Microsoft did not choose to make improvements in this area, or any improvements were missed by my test cases.

Final Thoughts

Rowgroup elimination seems designed to reduce IO requirements for queries that filter against contiguous ranges against a column, like filtering against a single month of data from a table, or when joining to the CCI through a hash join. It’s possible to write queries for which rowgroup elimination does not occur, even though SQL Server in theory has all of the information that it would need to perform rowgroup elimination. From a practical point of the view, the biggest limitation is probably around OR logic.

Thanks for reading!

Going Further

If this is the kind of SQL Server stuff you love learning about, you’ll love my training. I’m offering a 75% discount to my blog readers if you click from here. I’m also available for consulting if you just don’t have time for that and need to solve performance problems quickly.